Rather than testing in the same way every time, it can be a good idea to use the product in the way a certain type of person would. For example, use a website as someone who navigates only with the keyboard, so all actions have to be done without a mouse. Or use it as someone who always changes their mind, and so keeps using the back button to revisit pages. The first is likely to reveal accessibility/usability issues and the second could reveal inappropriate caching issues.

I realised the other day that if you can fully get into character, you can really experience how certain users would feel when using a product, act accordingly, and thus reveal flaws or bugs that you might otherwise not discover.

What follows is a description of my findings when I adopted a persona to test a website without even realising it.

Last Tuesday I wanted to order 2 pizzas from Pizza Hut for my wife and me, and I wanted to do it as soon as possible, in whatever way possible, and as cheaply as possible. I was very hungry, and when I'm hungry my patience is severely reduced and any small frustration is magnified.

So I wanted to get my order in as quickly and easily as possible. Was Pizza Hut online going to be up to the challenge?

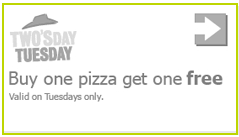

So the first thing I did was Google Pizza Hut. Up came a link to the Pizza Hut menu. Clicking this took me to a menu of all their pizzas, which seemed like a good start. However, although I wanted pizza fast, I also wanted a good price, and that meant getting the best deal I could. On the page there was no clear indication of any offers available. I didn't know where to find the deals, so I gave up (remember, I was not in the mood to spend time searching around) and clicked on the 'Order Pizza' button. This took me to a page where I had to choose whether to Order for Delivery or Order for Collection. I chose delivery and selected the delivery time. Then I was taken to a page where I could select DEALS, Pizzas, Sides and Dips, Desserts and Drinks. Why did I have to get this far before I could find out that any deals were even on offer?? So I clicked on Deals, where I was presented with 11 different deals, including Buy 1 get 1 half price; £21.99 Medium Super Saver (2 medium pizzas and 2 sides); £25.99 Medium Full Works (2 medium pizzas, 2 classic sides, 2 desserts and 1 drink); and Two'sday Tuesday (buy one pizza, get one free). Luckily for me, and for Pizza Hut, it was a Tuesday, so I could get the best deal: 2 pizzas for the price of one.

So I chose a large Stuffed Crust Cajun Chicken Sizzler for myself. This showed a total cost of £17.49 in the 'Your Order' section of the screen. I then ordered a large Stuffed Crust Super Supreme at £19.49 for my wife. The only problem was that the total for my order now showed as £36.98. 'How much??' I said out loud. 'Where's my 2 for 1 deal gone? You've just told me I'm getting a 2 for 1 deal and now where has it gone??' Maybe I'd pressed the wrong button or missed something, so I pressed the browser back button a couple of times, hoping this would take me back to the page where I could restart my order and empty my basket. I was back at the menu page, but my basket still showed £36.98! 'How do I empty my basket!!' By this point I was really getting annoyed and losing the will to live, so I clicked the Checkout button below my basket total. This took me to another page where, miraculously, my deal was taking effect and an entry of -£17.49 showed that I would only be paying for 1 pizza. 'Why couldn't they give me a clue I was doing the right thing on the previous page??'

Anyway, from here I managed to pay with my debit card with no disasters and my pizza even arrived on time.

From a testing point of view I found the 3 issues below:

- My choices were cached and kept in my basket, even after using the back button

- The back button did not take me back to the original page

- The price with the offer applied was not shown until the Checkout button was pressed
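The third issue can be illustrated with a small model of a basket. The sketch below is hypothetical and is not how Pizza Hut's site actually works: it assumes a Two'sday-style offer where, in each pair of pizzas, the cheaper one is free (which matches the -£17.49 that appeared at checkout), and the `Basket` class and its methods are invented for illustration. Prices are kept in pence to avoid floating-point rounding.

```python
from dataclasses import dataclass, field


@dataclass
class Basket:
    """Hypothetical basket model; prices are held in pence."""
    two_for_one: bool = False
    prices: list[int] = field(default_factory=list)

    def add(self, price_pence: int) -> None:
        self.prices.append(price_pence)

    def discount(self) -> int:
        # Assumed offer rule: sort by price, and in each pair the cheaper pizza is free.
        if not self.two_for_one:
            return 0
        ordered = sorted(self.prices, reverse=True)
        return sum(ordered[1::2])

    def total(self) -> int:
        return sum(self.prices) - self.discount()


basket = Basket(two_for_one=True)
basket.add(1749)  # large Stuffed Crust Cajun Chicken Sizzler
basket.add(1949)  # large Stuffed Crust Super Supreme

# What the 'Your Order' panel showed before checkout...
print(f"£{sum(basket.prices) / 100:.2f}")  # £36.98
# ...versus the deal-adjusted total that only appeared at checkout.
print(f"£{basket.total() / 100:.2f}")      # £19.49
```

A basket panel that displayed `total()` rather than the plain sum from the second pizza onwards would have told me straight away that the deal was working.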

I'm not sure whether any of these would be classed as a bug; it depends whose definition you use. I would apply Cem Kaner's definition of a bug as 'something that would bug someone that matters', i.e. the customers.

The number of customers these issues bug, and the financial impact of the potential loss of sales, is probably the more important question. Also, does the cost of fixing these issues outweigh the financial gain of keeping the sales? Product managers are the ones paid to answer these sorts of questions, but they are interesting nonetheless.

In conclusion, I found it very interesting looking back on the persona I had briefly adopted. It really changed the way I approached the website, which on another, less hungry day would have caused me no angst at all. More importantly, a calmer approach would possibly not have led me to notice as many issues, if any, with the way the site worked. I will aim to think up other personas for future use in my testing because, as demonstrated here, they help find new and interesting software quirks which could otherwise be overlooked.

Thursday 6 December 2012

Sunday 4 November 2012

Context Switching and Multitasking

Although not specific to testing, I feel that software development, particularly in an agile environment, can lead to a lot of inevitable priority changes, sometimes several times a day. Depending on the urgency of the new priorities, this may mean people have to stop focusing immediately on whatever task they are currently involved with to start working on the latest priority.

This change of attention from one task to another is known as context switching.

The Wikipedia definition of context switching refers to computers rather than humans, but I feel the definition below applies equally well to people.

A context switch is the computing process of storing and restoring the state (context) of a CPU so that execution can be resumed from the same point at a later time. This enables multiple processes to share a single CPU. The context switch is an essential feature of a multitasking operating system.
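The "store and restore" idea in that definition can be sketched with Python generators, which keep their local state at each `yield` and resume from exactly the same point when called again. This is a minimal, cooperative analogy rather than how an operating system actually saves CPU registers; the `task` and `round_robin` functions and the task names are purely illustrative.

```python
from collections import deque
from typing import Iterator


def task(name: str, steps: int) -> Iterator[str]:
    """A toy task. Each `yield` hands control back to the scheduler, and the
    generator keeps its own state (the loop counter) until it is resumed."""
    for i in range(1, steps + 1):
        yield f"{name} step {i}"


def round_robin(tasks: list[Iterator[str]]) -> list[str]:
    """Give each task one step in turn until every task has finished."""
    ready = deque(tasks)
    log = []
    while ready:
        current = ready.popleft()      # switch in: pick the next task
        try:
            log.append(next(current))  # resume it from its saved state
        except StopIteration:
            continue                   # task has finished; don't requeue it
        ready.append(current)          # switch out: its state stays inside the generator
    return log


log = round_robin([task("A", 2), task("B", 3)])
print(log)
```

Each task picks up where it left off, but only because the generator stored its context; a person switching tasks has no such free save-and-restore.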

Wikipedia also has the following definition for human multitasking:

Human multitasking is the best performance by an individual of appearing to handle more than one task at the same time. The term is derived from computer multitasking. An example of multitasking is taking phone calls while typing an email. Some believe that multitasking can result in time wasted due to human context switching and apparently causing more errors due to insufficient attention.

The notable word in the paragraph above is 'appearing', as I think multitasking, by definition, means one cannot devote 100% of one's attention to more than one task at a time. As I write this I happen to be watching Match of the Day 2, and I know that this blog is taking a lot longer to write than if I were not looking up every time a goal is scored! A perfect example of how not to multitask. In my opinion, where focused attention is required, multitasking rarely saves time or produces better-quality work compared with working on the same tasks sequentially.

A very important aspect of context switching is not the fact that what you are doing changes, but the fact that some amount of time is used up in getting your mind into a state of focus and readiness for the new task. In testing, this may extend to getting your environment set up for the new task as well as having to start thinking about something new. With several context switches per day this wasted time can soon add up.
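To see how quickly it adds up, here is a back-of-the-envelope sketch. The figures used (8 switches a day, 10 minutes to refocus after each) are hypothetical, not measurements; substitute your own.

```python
def minutes_lost_per_day(switches_per_day: int, refocus_minutes: float) -> float:
    """Refocusing time lost in a day, assuming every switch costs the same."""
    return switches_per_day * refocus_minutes


# Hypothetical figures, for illustration only.
daily = minutes_lost_per_day(8, 10)
weekly_hours = daily * 5 / 60  # five-day working week
print(daily, round(weekly_hours, 1))
```

With those assumed figures the total is 80 minutes a day, or roughly 6.7 hours over a five-day week.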

Interruptions can come in many forms, such as meetings, background conversations, questions, lunch, phone calls and e-mails, to name a few. There are measures you can take to minimise these interruptions, such as wearing headphones and turning off e-mail. Some people use the Pomodoro Technique so that they are left alone for at least 20 minutes at a time.
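The Pomodoro Technique is usually described as 25-minute blocks of focused work separated by 5-minute breaks, with a longer break after every four blocks. As a sketch, the function below lays out such a run of blocks; the function itself, the 15-minute long break and the start time are illustrative assumptions you can change.

```python
from datetime import datetime, timedelta


def pomodoro_schedule(start: datetime, pomodoros: int, work_min: int = 25,
                      short_break_min: int = 5, long_break_min: int = 15,
                      long_every: int = 4) -> list[tuple[str, datetime, datetime]]:
    """Return (label, start, end) entries for a run of pomodoros."""
    schedule = []
    t = start
    for n in range(1, pomodoros + 1):
        end = t + timedelta(minutes=work_min)
        schedule.append((f"focus {n}", t, end))
        t = end
        if n == pomodoros:
            break  # no break needed after the final pomodoro
        is_long = n % long_every == 0
        pause = long_break_min if is_long else short_break_min
        end = t + timedelta(minutes=pause)
        schedule.append(("long break" if is_long else "break", t, end))
        t = end
    return schedule


for label, begin, finish in pomodoro_schedule(datetime(2012, 11, 5, 9, 0), 5):
    print(f"{begin:%H:%M}-{finish:%H:%M}  {label}")
```

Planning the blocks up front also gives colleagues a predictable time to bring you their questions.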

Personally, I feel there are positives in having at least two things to work on (but not at the same time), so that if what you're working on becomes blocked you can start work on the second. The key is to remain in control of this switching so as to limit the time 'wasted' in refocusing on the new task.

One of my weaknesses (if it can be seen as such) is that I find it difficult to say no to someone who asks me to look into something for them. Sometimes I even welcome the interruption if I happen to be stuck in a rut with what I'm currently doing.

Going forward, I feel I need to be more aware of my own context switches, and I will be trying to reduce them to only those which are absolutely necessary or beneficial. I would urge everyone to do the same.


Wednesday 3 October 2012

Are Two Testers Better than One?

This sounds like a rhetorical question but the answer is not quite as obvious as you'd think. By better I mean more productive in terms of doing more useful testing, uncovering more bugs and getting more product coverage.

A more interesting question I'd like to ask is 'Are two testers working together, i.e. pairing, better than two testers working independently?' I often see pairing work for developers, helping each other avoid mistakes, so why not do the same with testing to increase the number of mistakes/bugs found?

For example, if there is a set of regression tests which need to be run, would it be more effective to split the tests between two testers or to have both testers work through them together?

From a time perspective, it seems counter-intuitive that the tests could be completed in less time if the testers work together. If each test occupies two people's time, then surely it could take just as long as with a single tester, thus doubling the cost of getting the work done.
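The cost argument can be made concrete with a rough model. In this sketch, `solo_hours` (how long one tester takes to run the whole suite alone) and `pair_speedup` (how much faster a pair is than one tester) are hypothetical inputs, not figures from my own testing.

```python
def regression_run(solo_hours: float, mode: str,
                   pair_speedup: float = 1.0) -> tuple[float, float]:
    """Return (elapsed_hours, person_hours) for one regression run."""
    if mode == "solo":   # one tester runs the whole suite
        return solo_hours, solo_hours
    if mode == "split":  # two testers, half the suite each, working independently
        return solo_hours / 2, solo_hours
    if mode == "pair":   # two testers working through the whole suite together
        elapsed = solo_hours / pair_speedup
        return elapsed, 2 * elapsed
    raise ValueError(f"unknown mode: {mode}")


for mode in ("solo", "split", "pair"):
    print(mode, regression_run(8, mode))
```

With no speed-up, pairing takes as long as one tester working alone and costs twice the person-hours; it only matches splitting the tests when the pair is twice as fast. So any case for pairing has to rest on benefits other than raw speed, such as finding more bugs and getting better coverage.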

The answer to the question depends on several factors:

- How experienced is each tester?

- What product knowledge do they each have?

- How quickly/efficiently do they work?

- Do they work well together?

- Are they good communicators?

- Are they familiar with the tests?

- Do they think and work differently and use different methods from one another?

- Do their skills complement one another?

Until recently I had been doing all the regression testing for the area I'm responsible for by myself and, to be frank, it can be hard to keep it interesting and different each time.

However, for the last couple of regression test runs I have sat side by side with another tester and worked together on what are normally our own separate sets of tests. I looked forward to trying this as, at the bare minimum, I'd have someone to talk to whilst testing. It was only once we began that I realised the various other benefits of pairing.

One of the first benefits I noticed was learning more about the area of the product in which my fellow tester specialises. I am generally responsible for one area of the product, and although I know a lot about the other areas, they are not my primary focus, so it was interesting to see how much I could learn from my colleague.

Another advantage was the amount of communication between us, so we knew what each other was doing and could complement each other rather than duplicate the work.

Also, we avoided carrying out unnecessary testing which had already been covered as part of earlier feature testing and bug fixes. Without this interaction, this time-saving information would not have been shared and regression testing would have taken longer than necessary.


There are several advantages I see to pair testing:

- Increased creativity

- More efficient testing

- Time saving

- Improved communication

- Keep regression testing interesting

- Bounce ideas off one another

Other ideas this subject brings to mind are:

- Pair with different testers to mix things up and bring out new ideas and ways of working

- Try pairing on other areas of test such as bug fixes and story features

- Test with 2 or more other testers (may be impractical)

- Pair when doing exploratory testing

In conclusion, I highly recommend trying pair testing, even if only as an experiment; what is the worst that could happen?

Sunday 2 September 2012

Purposeful Regression Testing

Regression testing is a term that all testers ought to be familiar with but does it mean the same thing to all of us?

My view of regression testing has gradually been changing since I started as a software tester 8 months ago. When I first started out, one of the first things I was introduced to was the set of regression tests we run for every new kit. This was a good place to start, as it helped me build up my knowledge of the product and become familiar with quite a wide coverage of tests. With each run of the regression tests I became more confident and found I could run through the tests more quickly, with less need to read the test steps word for word. Although this was all good and positive, I could also feel myself getting a bit too comfortable, and I did not feel I was putting enough thought into my regression testing.

It felt as though this testing was gradually turning into checking but at the same time I thought this must be the nature of the beast. However, running the same manual tests time after time is not what I'd call fun.

So what can we do to change things?

Of course, automation is one tool we can use to reduce the number of regression tests we run manually. Automation is especially useful when the tests are literally run in the same way following identical steps every time and the results are simple enough for a computer to understand.
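As a minimal sketch of the kind of test that suits automation, here is a table-driven check written with Python's built-in `unittest` module. The function under test, `clamp_volume`, and its 0-100 range are hypothetical stand-ins for whatever the kit actually does.

```python
import unittest


def clamp_volume(level: int) -> int:
    """Hypothetical function under test: keep a volume level within 0-100."""
    return max(0, min(100, level))


class VolumeRegressionTests(unittest.TestCase):
    # Identical steps every run and an unambiguous expected result:
    # exactly the kind of check worth handing over to a computer.
    CASES = [(-5, 0), (0, 0), (50, 50), (100, 100), (150, 100)]

    def test_clamp_volume(self):
        for level, expected in self.CASES:
            with self.subTest(level=level):
                self.assertEqual(clamp_volume(level), expected)


suite = unittest.defaultTestLoader.loadTestsFromTestCase(VolumeRegressionTests)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Adding a new case is one more tuple in `CASES`, which keeps the repetitive checking cheap and leaves more time for the testing that needs a human.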

Some of the tests cannot be automated, or, if they were automated, their results could not be interpreted reliably by a computer. This may be due to timing issues in running the test, or to the need to assess the audio quality of the output, which we cannot (yet?) trust a computer to judge reliably.

However, as well as these special cases, I believe that there will always be fundamental manual tests that must be carried out by a human to ensure confidence that the kit has the basics right, before moving on to the 'more interesting' testing.

Every tester knows that it is not possible to run every test variant on a product of any reasonable size, let alone get close to this with every set of regression tests. For this reason regression tests need to be carefully chosen to be of maximum use specifically to the kit in question. To continue to be useful I believe several factors need to be taken into account when selecting what tests to run as part of a regression suite. I would include the following in my areas for consideration:


- Recent new product features - Areas of the product which have recently been created. Even though they may have been thoroughly tested, I would generally still want to focus on a new area rather than an older one. I would expect more bugs to be lurking there, as new areas are likely to have had less exposure to different variants of input than more established parts of the product.

- Customer impact - If I had to choose between testing only area A or only area B, and was told both were of equal size but area A was considered essential to 70% of customers and area B important to only 30%, I would choose to test area A.

- Time - We can obviously only perform a finite amount of testing within whatever time frame we have. So, looking at the example above, in simple terms if I had 100 minutes to test I would spend 70 minutes on area A and 30 minutes on area B.

- Bug fixes - Even when bugs have been fixed and successfully retested, I still like to focus on these areas, as they are effectively new areas of code, so the same principle applies as for new features.

- Refactoring - Again for the same reasons as new features and bug fixes.
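The time-splitting arithmetic in the list above can be sketched as a proportional allocation. `impact` holds each area's customer share, as in the area A/B example; the optional `risk` multiplier, for areas with new features, bug fixes or refactoring, is a hypothetical addition to show how the other factors could be folded in, not a formula from any standard.

```python
from typing import Optional


def allocate_minutes(budget: float, impact: dict[str, float],
                     risk: Optional[dict[str, float]] = None) -> dict[str, float]:
    """Split a time budget across areas in proportion to impact x risk.

    impact: share of customers relying on each area (e.g. 70 vs 30).
    risk:   optional, hypothetical multiplier per area (defaults to 1.0).
    """
    risk = risk or {}
    weights = {area: share * risk.get(area, 1.0) for area, share in impact.items()}
    total = sum(weights.values())
    return {area: budget * w / total for area, w in weights.items()}


print(allocate_minutes(100, {"A": 70, "B": 30}))  # {'A': 70.0, 'B': 30.0}
# Hypothetical: area B was recently refactored, so weight it double.
print(allocate_minutes(100, {"A": 70, "B": 30}, risk={"B": 2.0}))
```

In the second call area B's weight doubles from 30 to 60, so it gets roughly 46 of the 100 minutes instead of 30. Real decisions involve more judgement than a single formula, but writing the weights down makes the trade-off explicit.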

Targeted and meaningful regression testing should include the core tests which cannot be automated, but it should also take into account all of the factors above. The examples above are obviously very simplified, so deciding what to test and for how long is not straightforward. I think part of the skill of being a tester is to weigh up all the factors and choose one's tests wisely rather than blindly following a regression test script. I'd like to think that's how regression testing is done in the majority of companies, but I fear it may not be.

Monday 6 August 2012

Thinking, Fast and Slow - Book Review

Over the past few weeks I have been reading a book called Thinking, Fast and Slow by Daniel Kahneman.

This book was recommended to me by a good friend of mine who works as a software tester for another company. He told me that it would change the way I think about how our minds work, and indeed it has.

As the title suggests, Daniel Kahneman describes how our minds are split into two main systems which we use when we think and make decisions. He refers to these as System 1 and System 2. System 1 is described as automatic and subconscious. So when we feel we are acting on our instincts, gut reactions or hunches, we are said to be using our (fast) System 1 to make these decisions. When we act on instinct we do not take a step back to analyse the situation before coming to a decision; we just feel that it's right. Often, this is exactly how we want our minds to work. For example, if someone throws a ball in your direction, you need to decide quickly whether you're going to dodge or catch it. Not a lot of conscious thought goes into the decision, as there is not enough time to think about what action to take.

There are other situations where taking your time before acting is much more appropriate. For example, if you're looking to buy a new car, you won't make a quick choice based on looks alone; you will want to think about many aspects such as performance, economy and mileage. Therefore, before making your choice you will have to use your (slow) conscious System 2.

It is the occasions where we don't feel a decision requires a great amount of thought which can lead to errors of judgement. Something may seem simple and obvious on the face of it but only when you really apply conscious time and thought do you see things more clearly. This is a good point to bear in mind, especially when testing software.

One of the mistakes we are prone to make is letting our own personal experiences of events bias our view of the probability that those events will happen in the future. For example, if you have a family history of heart attacks and you are asked what percentage of deaths nationally are caused by heart attacks, chances are you will overestimate it compared with someone who has no personal experience of heart attacks. This is known as the availability heuristic, since instances which come to mind easily (are available) lead us to think that events are more common than they are in reality.

When we have personal experience of a subject we must not let it influence our view of the facts; however, this is easier said than done. Another idea related to the availability heuristic is the acronym WYSIATI, which stands for 'What you see is all there is'. We each have our own view of the world, and everyone's view is different. We often don't look or investigate any further than what we've seen personally, as we don't always believe there is more to see.

We need to train ourselves to think about the bigger picture as there is often a lot more going on that we don't realise just because we haven't paid attention to it.

Below I've briefly described a few of the many ideas Daniel talks about in the book which are useful to bear in mind, especially when applied to software testing.

Sample size

One way in which it is very easy to arrive at an incorrect conclusion is by making judgements based on a small sample. The sample should be large enough that natural fluctuations in results do not skew the overall result. For example, if you toss a coin 1000 times, as well as being very bored and worn out, you should find that the percentage of heads is not far from 50%. However, if you only toss the coin 10 times, you could quite easily see 7 heads out of 10. We all know that the probability of a head is 50%, but when we don't know the probability, we need to choose a sample large enough to minimise the influence of natural fluctuations in results.
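The coin example can be checked exactly with the binomial distribution. This sketch, using only Python's standard library, computes the probability of getting at least `k` heads in `n` tosses of a fair coin.

```python
from math import comb


def prob_at_least(k: int, n: int, p: float = 0.5) -> float:
    """P(X >= k) for X ~ Binomial(n, p): the chance of k or more heads in n tosses."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# 70% or more heads from a small sample...
print(prob_at_least(7, 10))  # 0.171875
# ...and the same proportion from a large sample.
print(prob_at_least(700, 1000))
```

Seeing 70% or more heads in 10 tosses happens roughly one time in six, whereas seeing 70% or more in 1000 tosses is vanishingly unlikely. The same proportion means very different things at different sample sizes.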

Answering an easier question

When you are trying to answer a difficult question, or one that you do not know much about, it can be natural to give an answer based on what you do know about something linked to the question. For example, if you are asked 'How happy are you with your life?', you are likely to answer based on how you feel about things right now rather than thinking more objectively about your life as a whole.

Regression to the mean

Sometimes people misinterpret a correlation between events as causal just because they both occurred at the same time. Daniel explains that it's important to bear in mind that measurements in a particular category tend to form a bell-shaped curve over time, with fewer results at the extremes and the majority falling somewhere in between. Because an extreme result is usually partly down to chance, the next measurement is likely to be closer to the middle, so there is a general regression to the mean, or average result.

This can explain why, more often than not, punishment of a bad result or low score is followed by an improvement, and rewarding success is followed by a worsening in performance. This phenomenon can lead to the mistaken belief that it was the punishment that caused the improvement, or the reward that led to the deterioration. This is a great shame, as many employers are not aware of regression to the mean and believe that their punishment of poor performance is always responsible for the subsequent improvement, so they see no reason to change this behaviour.
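Regression to the mean is easy to reproduce in a simulation. This sketch assumes a simple model that is not taken from the book: each person has a fixed underlying skill, and each measured score adds independent random luck. Nobody is punished or rewarded between the two rounds, and the numbers (mean 50, spreads of 10) are arbitrary.

```python
import random
from statistics import mean


def simulate(n_people: int = 10_000, seed: int = 1) -> tuple[float, float]:
    """Score = fixed skill + random luck, measured twice with no intervention."""
    rng = random.Random(seed)
    skill = [rng.gauss(50, 10) for _ in range(n_people)]
    round1 = [s + rng.gauss(0, 10) for s in skill]
    round2 = [s + rng.gauss(0, 10) for s in skill]
    # The bottom 10% in round 1: the people an employer might "punish".
    cutoff = sorted(round1)[n_people // 10]
    worst = [i for i in range(n_people) if round1[i] < cutoff]
    return mean(round1[i] for i in worst), mean(round2[i] for i in worst)


before, after = simulate()
print(f"worst performers: round 1 avg {before:.1f}, round 2 avg {after:.1f}")
```

The bottom 10% from round 1 score noticeably closer to the average in round 2, purely because their bad luck in round 1 is not repeated. An employer who had punished them in between might well credit the punishment for the improvement.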

Since reading Thinking, Fast and Slow, I've found myself thinking about real-life situations where I can apply the principles described. Even when you have read the book and know how our minds work, it still seems impossible to avoid falling into many of the traps our brains seem hardwired to fall into. But knowing how our minds work, and the flaws that exist, can be empowering and should lead to more comprehensive testing.

This book was recommended to me by a good friend of mine who works as a software tester for another company. He told me that it would change the way I think about how our minds work, and indeed it has.

As the title suggests, Daniel Kahnemann describes how our minds are split into two main systems which we use when we think and make decisions. He refers to these as System 1 and System 2. System 1 is described as automatic and subconscious. So when we feel we are acting on our instincts, gut reactions or hunches, we are said to be using our (fast) System 1 to make these decisions. When we act on instinct we do not take a step back to analyse the situation before coming to the decision we make, we just feel that it's right. Often, this is exactly how we want our minds to work. For example, if someone throws a ball in your direction you need to make a quick decision as to whether you're going to dodge or catch the ball. Not a lot of conscious thought is going into the decision as there is not enough time to think about what action you will take.

There are other situations where taking your time before acting is much more appropriate. For example, if you're looking to buy a new car you won't make a quick choice based on looks alone, you will want to know think about many aspects such as performance, economy, mileage etc. Therefore, before making your choice you will have to use your (slow) conscious System 2.

It is the occasions where we don't feel a decision requires a great amount of thought which can lead to errors of judgement. Something may seem simple and obvious on the face of it but only when you really apply conscious time and thought do you see things more clearly. This is a good point to bear in mind, especially when testing software.

One of the mistakes we are prone to make is letting our own personal experience of events bias our view of the probability that those events will happen in the future. For example, if you have a family history of heart attacks and you are asked what percentage of deaths nationally are caused by heart attacks, then chances are you will overestimate it compared with someone who has no personal experience of heart attacks. This is known as the Availability heuristic, since instances which come easily to mind (are available) lead us to think that events are more common than they are in reality.

When we have personal experience of a subject we must not let it influence our view of the facts; however, this is easier said than done. Another idea closely related to the Availability heuristic is the acronym WYSIATI, which stands for 'What you see is all there is'. We each have our own view of the world and everyone's view is different. We often don't look or investigate any further than what we've seen personally, as we don't always believe there is more to see.

We need to train ourselves to think about the bigger picture as there is often a lot more going on that we don't realise just because we haven't paid attention to it.

Below I've briefly described a few of the many ideas Daniel talks about in the book which are useful to bear in mind, especially when applied to software testing.

Sample size

One way in which it can be very easy to arrive at an incorrect conclusion is when judgements are made based on a small sample size. The sample should be large enough that natural fluctuations do not skew the overall result. For example, if you toss a coin 1000 times you will be very bored and worn out, but the percentage of times you see heads should not be far from 50%. However, if you only toss the coin 10 times, you could quite easily see 7 heads out of 10. We all know that the probability of seeing a head is 50%, but when we don't know the probability we need to choose a sample large enough to minimise the influence of natural fluctuations in the results.
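To put some numbers on the coin example, here is a small Python sketch of my own (not from the book). It assumes a fair coin and uses the binomial distribution to work out the exact chance of getting at least 70% heads for different numbers of tosses; `prob_at_least` is just a name made up for this illustration.

```python
from fractions import Fraction
from math import comb


def prob_at_least(k: int, n: int, p: Fraction = Fraction(1, 2)) -> Fraction:
    """Exact probability of seeing at least k heads in n tosses of a coin
    that lands heads with probability p (binomial distribution)."""
    return sum(comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k, n + 1))


# Chance of at least 70% heads, for increasing sample sizes.
for n in (10, 100, 1000):
    k = (7 * n) // 10
    print(n, float(prob_at_least(k, n)))
```

The first line printed is `10 0.171875`, so with only 10 tosses you would see 7 or more heads roughly one time in six. The figures for 100 and 1000 tosses shrink dramatically, which is the point: the bigger the sample, the less room there is for luck to skew the result.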

Answering an easier question

When you are trying to answer a difficult question, or one that you do not know much about, it can be natural to give an answer based on the knowledge you do have about something linked to the question. For example, if you are asked 'How happy are you with your life?' you are likely to answer based on how you feel about things right now, rather than thinking more objectively about your life as a whole since birth.

Regression to the mean

Sometimes people misinterpret a correlation between two events as causal just because one followed the other. Daniel explains that it's important to bear in mind that measurements of a particular quantity tend to form a bell-shaped curve over time, with fewer results at the extremes and the majority falling somewhere in between. Because of this, an unusually extreme result is likely to be followed by one closer to the average, regardless of anything we do in between. This is known as regression to the mean.

This can explain why, more often than not, punishing a bad result or low score is followed by an improvement, and rewarding success is followed by a worsening in performance. This phenomenon can lead to the mistaken belief that it was the punishment that caused the improvement, or the reward that led to the deterioration. This is a great shame, as many employers are not aware of regression to the mean and believe that their punishment of poor performance is always responsible for the subsequent improvement, so they have no reason to change this behaviour.
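Regression to the mean is easy to see in a quick simulation. The sketch below is my own illustration under a simple assumed model: each person's score is a fixed underlying skill plus some random luck on the day, both drawn from normal distributions with made-up parameters. It takes the worst 10% from the first round and looks at how the same people score in the second round, with no punishment or reward in between.

```python
import random
import statistics


def simulate(n_people: int = 10_000, seed: int = 1) -> tuple[float, float]:
    """Score = fixed skill + random luck (an assumed model, made-up numbers).
    Returns the bottom 10% of round 1's average score in round 1 and round 2.
    Nobody is punished or rewarded between the two rounds."""
    rng = random.Random(seed)
    skill = [rng.gauss(50, 10) for _ in range(n_people)]
    round1 = [s + rng.gauss(0, 10) for s in skill]
    round2 = [s + rng.gauss(0, 10) for s in skill]

    # Indices of the worst 10% of performers in round 1.
    cutoff = sorted(round1)[n_people // 10]
    worst = [i for i in range(n_people) if round1[i] < cutoff]

    before = statistics.mean(round1[i] for i in worst)
    after = statistics.mean(round2[i] for i in worst)
    return before, after


before, after = simulate()
print(f"bottom group, round 1: {before:.1f}")
print(f"bottom group, round 2: {after:.1f}")
```

The bottom group's average rises noticeably in round 2 even though nothing was done to them; only their luck changed. An employer who had punished that group after round 1 could easily credit the punishment for the improvement.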

Sunday 1 July 2012

Take it with a pinch of salt

One lesson that I'm learning fast as a software tester is that you won't improve if you take everything you see, hear and read at face value.

In the interests of saving time and/or effort this is a very easy trap to fall into. Perhaps you are most susceptible to it when you are inexperienced and at the mercy of all the new information you are being bombarded with. Ironically, it could be even more harmful for those with more experience and responsibility, who believe they know everything there is to know and therefore stop asking questions.

It sometimes feels like there is no option other than to take the information you're given at face value. At least if it's not accurate you can use it as a baseline which can be modified as new information comes to light.

One example of where I fell into this trap was when I had some testing to do on a new feature and wasn't sure whether it had to be done on all of the environments we use. I didn't want to spend time duplicating my tests in different areas if that was not necessary. Ideal as it would be to test everything in every possible scenario and environment, this simply is not possible, so we try to fit the best coverage we can into our timescales. So I asked someone whether it mattered which environment I used to test the new feature, and they told me it didn't. The person I asked has a lot more experience than me, so I felt I had no reason to question what I was told. I duly carried out my testing and reported the results, not realising I had not been testing exactly what I thought I had. In hindsight, if I'd asked a couple more questions I probably would have realised what should have been tested. So I learnt an important lesson: it's much better to ask one question too many, at the risk of looking stupid, and get things right, than to avoid that question and end up looking really stupid.

Bug descriptions are another place where information can be misconstrued. It is very easy to assume that the report you're writing about a bug you saw 5 minutes ago is as clear on paper as the bug is in your mind. When you've forgotten about that bug and read your report a month later, is it still as clear? Even more importantly, is it clear to someone else?

I'm not saying people shouldn't be trusted, but when what they've said or written seems to you to mean something other than what they intended, you need to find out what they actually mean.

I think the best way to combat this pitfall is to keep asking questions until you are confident that there is no ambiguity left in your mind about what the facts are. This attitude, and the frequency of questioning, should not diminish with experience; if anything, it should probably increase.

Wednesday 30 May 2012

Variety is the spice of Test

One of the reasons I decided to get into software testing was its appeal as a job which would keep me interested and not become repetitive and boring, as so many jobs can. I think software testing can be as interesting as you want to make it, within reason. My main caveat would be that you have a much better chance of achieving job satisfaction if the company you work for has the attributes of a forward-thinking company, such as one which adheres to as many of 'Deming's 14 Points' as possible, which can be seen at the following web page (http://leanandkanban.wordpress.com/2011/07/15/demings-14-points/).

One of the main factors I've found in maintaining interest is continual reading around the subject of software testing, including not only books specifically about testing but also books covering wider subjects, such as the industry you're in or how our minds work. Although not directly related to testing, the knowledge gained from many other areas can be useful to have in the back of your mind when testing. One example is Thinking, Fast and Slow by Daniel Kahneman, a book about how we appear to have two distinct ways of thinking about things: the instinctive gut reaction (thinking fast) and the more conscious, considered approach (thinking slow). I intend to write about my thoughts on this book in a future blog post.

I started using Twitter about 6 months ago and have found it a great way to access lots of current thoughts, trends and blogs from people who have vast experience of software testing. There is no shortage of views and ideas, and Twitter has become part of my daily routine.

Although testing our product is classed as my main role, fulfilling that role involves lots of interaction with other areas of the company. As we're all based in the same building, those interactions are usually immediate and face to face, so feedback loops are short and progress is made quickly. We also release new kits on a regular basis and are constantly adding new features and improving existing ones. So although we have a stable product, we as individuals and as a company are in a constant state of progression, continually improving and polishing our skills and our service. This goes not only for me and the test team but for everyone in the company; we are all pulling in the same direction.

So my job not only involves testing; it also involves meetings with the product owners to discuss upcoming product enhancements and plan how best to test them. It also involves working closely with developers to see features first hand, as they are created and as early as possible. That way I can find out how they intend to code the requirements, with an eye on testability and on automating tests where possible. The more of the predictable, repetitive tests that can be automated the better, as that leaves more time for the unusual scenarios which only a human tester can carry out and/or interpret. That is the sort of testing I want to be doing, as it engages my mind and allows me to be creative.

It's this variety which keeps my job interesting, and I hope any non-testers who read this can take it from me that getting into software testing is well worth the effort.

Sunday 29 April 2012

April 2012 - Time is Precious

One of the natural consequences of working in software development is that requirements can change at any time. Because we're an Agile team working in an Agile environment this is not a big problem, as we are prepared and able to react quickly to the changing demands of management and customers. If we were working in a Waterfall environment (which I've heard about but not experienced for myself) I imagine we would find it a lot harder to adapt to such short-notice changes. Because requirements can change, we are not always in control of how much time we will have to carry out development and testing. I don't see this as a negative; it just means we have to be adaptive and flexible. I have also found that it brings out the creativity in the team and makes for a challenging and rewarding experience.

On a team level, and also on a personal level, this means that we have to make the best use of the sometimes limited time we have. In an ideal world customers would work to dates we set them and not mind if those dates kept slipping, so that we could take our time to create even more polished applications. Obviously, in reality this is never the case, as customers want to move fast and beat the competition to market. They want to be using a product we can provide before they find a similar product created by one of our competitors.

To achieve this we must have all areas of the company working together to meet the customer's requirements. When we all pull together to deliver what the customer wants, when they want it, it is very satisfying to know that we are driving the company forward by meeting customer expectations and improving our reputation.

One of the conclusions I am coming to is that there's nothing like a deadline to focus the mind. I don't mean cramming everything in, like doing all your revision the night before an exam, but rather planning what needs to be done today, what needs to be done this week, and what contingency plans we have if requirements change. I don't think deadlines necessarily need to be dictated by customers either. When a piece of work is not directly customer dependent, that should not mean we rest on our laurels until the next 'real' deadline comes along. If we commit to a target date for every task we undertake, then we should have a gauge, at least in our own minds if not visible to the team, of how our tasks are progressing against the deadline we have committed to. Commitment-based tasks are something we are considering where I work, so the results should be interesting. The theory is that our time management and work rate ought to improve because we have a defined, fixed target to aim at.

Monday 26 March 2012

March 2012 - Phases of Testing

As it becomes more instinctive for me to decide which part of our application to test next (e.g. smoke testing a new kit, which bug fixes to concentrate on, or which new story features to explore), I can start to focus more attention on exactly how I go about my testing. This includes the preparation/planning, the main phase of testing itself, and the reporting of my findings afterwards.

Pre-Testing

I have started to get into the habit of using Mind Maps (XMind) to get the main topics down in black and white. Although it's a very straightforward idea, I find it very useful, as I can forget even the brightest idea pretty quickly if I don't write it down. Mind Maps also double as useful documentation for future reference, so anyone who wants to test a feature they haven't seen before can view the Mind Map for test ideas to get them started. I find them of particular use when testing a brand new feature or an area of the product with which I am less familiar.

Testing

When I first started testing I was paying more attention to what was happening on the screen in front of me than to making notes of what I saw. This was very bad practice, as memory tends to be a lot less reliable than the written word. Even the smallest change in the steps to reproduce a bug or unusual behaviour can mean you are no longer able to reproduce it. However memorable something seems at the time, there is no substitute for recording your findings. I now make an effort to take notes while I test (using Notepad++), especially when testing something new in an exploratory way, as they can prove invaluable when trying to recall the steps that produced any bugs found.

I also tend to use Firefox a little more than other browsers so that I can have Firebug running in the background. I can then include the Firebug error output with my description when I make a bug report, which can sometimes help developers pinpoint the origin or cause of the defect.

Screen grab tools and videos are also very useful to back up a description, and can often make it a lot easier to visualise what has happened than a written description alone.

Re-Testing

Under this heading I include retesting in the sense of Regression testing and also Re-Testing bugs which are believed to have been fixed.

When I run regression tests I do not make as many notes, since the paths followed are well trodden and the steps are already recorded. However, I use the same methods for recording findings if I find a bug while running regression tests.

When Re-Testing bug fixes it can be very easy to fall into the trap of only testing the 'happy path', or the scenario in which the bug was originally found. It's also important to test around the edges of the happy path and test the bug scenario with similar but not identical variations in order to be confident the bug(s) have been fully fixed and will not resurface later on. The number of different paths you can test is obviously time dependent but the more you have time for the better.
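As a rough illustration of testing around the original bug scenario, here is a Python sketch. The function `bulk_discount` and the bug it once had (an order of exactly 10 items getting no discount) are entirely hypothetical; the point is the shape of the re-test: the exact scenario from the bug report, then similar but not identical variations either side of it, then a check that the fix hasn't loosened anything else. The `test_` functions can be run with pytest or directly as a script.

```python
def bulk_discount(quantity: int) -> int:
    """Hypothetical function under test: percentage discount for an order.
    The (imagined) original bug was that exactly 10 items got no discount."""
    if quantity < 0:
        raise ValueError("quantity cannot be negative")
    return 10 if quantity >= 10 else 0


def test_original_bug_scenario():
    # The exact steps from the bug report: 10 items should get 10% off.
    assert bulk_discount(10) == 10


def test_variations_around_the_fix():
    # Similar but not identical inputs either side of the original scenario.
    cases = {0: 0, 1: 0, 9: 0, 11: 10, 1000: 10}
    for quantity, expected in cases.items():
        assert bulk_discount(quantity) == expected, quantity


def test_invalid_input_still_rejected():
    # Make sure the fix didn't loosen validation elsewhere.
    try:
        bulk_discount(-1)
    except ValueError:
        return
    raise AssertionError("negative quantity should be rejected")


if __name__ == "__main__":
    test_original_bug_scenario()
    test_variations_around_the_fix()
    test_invalid_input_still_rejected()
    print("all re-tests passed")
```

How many variations you add will depend on the time available, but even a handful of neighbouring cases gives much more confidence than repeating the original steps alone.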

Reporting Test Findings

Once testing is complete it is important to record exactly which areas of the product have been tested and in what way. This is especially important when describing how to reproduce a bug, because if the steps are not accurate the developers may not be able to reproduce it for themselves. The steps to reproduce are also important once the bug is fixed and you need to repeat the original steps that produced it. You must also make sure you include all relevant setup information, such as the browser used, the kit version, user credentials, the scenario and any accompanying event logs. This gives the developers the best chance of identifying and reproducing the bug and reduces the chance that they will need to come back to you with further questions. It should also minimise the chance of hearing the well-known 'It works on my machine' response.
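One way to make sure none of that setup information gets forgotten is to work from a consistent template. Below is a hypothetical sketch in Python; the field names are my own, based on the list above plus expected and actual results, and `missing_fields` simply lists anything left blank so it can be filled in before the report goes to the developers.

```python
from dataclasses import dataclass, field, fields


@dataclass
class BugReport:
    """Hypothetical bug report template; field names are illustrative only."""
    title: str
    steps_to_reproduce: list[str]
    expected_result: str
    actual_result: str
    browser: str = ""
    kit_version: str = ""
    user_credentials: str = ""  # which test account was used, not a real password
    scenario: str = ""
    event_logs: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Names of fields left empty, to check before submitting."""
        return [f.name for f in fields(self) if not getattr(self, f.name)]


report = BugReport(
    title="Basket total not updated after removing an item",
    steps_to_reproduce=["Log in", "Add two items to the basket", "Remove one item"],
    expected_result="Total shows the price of the remaining item",
    actual_result="Total still shows the price of both items",
    browser="Firefox",
)
print(report.missing_fields())
```

Running this prints `['kit_version', 'user_credentials', 'scenario', 'event_logs']`, a reminder of what still needs adding before the report is sent.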

Wednesday 21 March 2012

February 2012 - Why I made the right choice

I have now been a Software Tester for two months. It seems like only yesterday I started, as the time has gone so fast, but at the same time, I have probably learnt more in the last two months than I did during the last year at my previous job. This says a lot about the difference between my previous employer and my new employer and how they make me feel about going to work.

I think the word Agile probably sums up the difference. Although this is generally a Software Development methodology I think the values below (taken from the Agile Manifesto) can and should apply to multiple industries:

- Individuals and interactions over processes and tools
- Working software over comprehensive documentation
- Customer collaboration over contract negotiation
- Responding to change over following a plan

I think the list above highlights quite accurately why the way things are done where I work now makes much more sense than the monotonous planning, processes, documents and contracts my former bosses seemed disinclined to move us away from.

Where I now work there is a lot of emphasis on learning, both from colleagues and through self-driven education. When there is a positive atmosphere where everyone wants to learn and use their knowledge to help others, it instils in me a desire to improve my own knowledge outside of work. I think it helps that Software Development and Testing are such vast and ever-expanding areas, which the world is becoming increasingly reliant upon as companies go online and start using the cloud, rather than the high street, to sell their wares. In hindsight I probably would have gone down the Computer Science rather than the Sports Science route at University.

As a tester, one of the main lessons I've learnt so far is that there are some core aspects of working in a Test team which must be right to allow a smooth and efficient delivery of work. For me, two of the most important aspects are communication and time management.

Communication matters so that everyone has a good idea of what other individuals in the team are working on, and tasks are not duplicated. It also means being aware of what other teams are working on and how that may affect what your own team is doing. We achieve this through daily team stand-ups followed by a scrum of scrums stand-up. This means that not every individual needs to listen to every other individual's update, which saves a lot of valuable time.

It really helps that we sit very close to the developers, so as soon as a bug or issue surfaces we can grab one to demonstrate the problem and they can look into it straight away. Sometimes a bug is already known about and a fix is in the pipeline, or it can be put down to a limitation of the system, or to a feature which hasn't been built yet, rather than a fault in the software. I'm sure that as time goes on I will learn more about these possibilities and will end up bugging the developers a little less myself.

I've also learnt that effective time management is very important in testing, as time can very easily be eaten up by going off on tangents. By this I mean you have to stay focused on the task at hand, whether that is testing that a bug is fixed, testing a new feature or exploring a new area of the software. In any of these situations you can easily spot a bug or unusual behaviour and decide to investigate a little further. Before you know it you have lost half an hour and wonder where it went. This has a doubly negative effect: you have lost focus on your original task, and you are probably not 100% focused on the new quirk either, because you know you have digressed and ought to get back to what you should be doing. Now, when I spot something new, I make a quick note of it to look into later when I can allocate time specifically to it, however tempting it may be to pursue it at the time of discovery.

After two months of testing I really feel part of the Development team and appreciate the way the team works. I've learnt a lot and am sure I won't stop learning while I'm still a Software Tester. I'm quite sure I have not written anything revelatory as yet. Hopefully over time my posts will offer more insightful views and I'll be able to talk more about experiences that other testers may value.

January 2012 - New Year, New Career in Software Testing

Hello, my name is Andrew. At the beginning of 2012 I entered the big wide world of software testing. Although I am obviously new to this arena, I am quite confident that my use of the words 'big' and 'wide' is unlikely to be up for debate. However, I welcome any debate or correction on anything I write here.

In my short time in this industry, I have become aware that software testing means different things to different people. From what I can gather, the majority of software testers fall into two broad camps; for the purposes of argument I will call one group 'Checkers' and the other 'Testers'.

The 'Checkers' see software testing as a mundane day job in which they follow a set of instructions from which they have no interest in deviating. They believe they are not paid to do any more than this and have no particular desire to.

The 'Testers' are in complete contrast to the 'Checkers'. They see their job as a challenge and look forward to trying new ways of testing every day. They enjoy their job because they get a feeling of fulfilment from taking on new challenges and doing things differently and with variety. These two categories are obviously rather generalised and sit at opposite ends of a spectrum. I think that the reality probably falls somewhere in between these two extreme ways of working.

My previous background is in telecoms. I won't mention which provider, as I don't wish to confirm what are probably correct assumptions. All I can say is that the culture of my previous workplace and that of my new employer are worlds apart. Although the work was not software testing, I can still recognise that the mindset of most people was analogous to that of a 'Checker'. I would say that any ambitions people had were made very difficult to realise by the working culture that surrounded them.

I believe that it is largely down to the individual to take responsibility for which way of working they choose to follow. Although it is not the employer's responsibility to make an employee think in a certain way, employers can do much to support them by providing the tools, resources, training, people and culture that help a tester progress as a 'Tester' rather than become a 'Checker'. As is probably clear, I am very much in favour of staying as close as I can to the 'Tester' end of this scale. I am happy to report that my new employer does fulfil the supporting role I feel I need to enable my testing career to progress and flourish. This very blog is a prime example. I have never written a blog before, but having read others I feel a desire to record my thoughts, if not for others to read, then at least as a record for myself of my views as my testing career progresses.
